Server-Sent Events: How to Build a Chat App with Streaming Response like ChatGPT

Introduction

Imagine a web page that could subscribe to data from the server: all it has to do is establish a connection to a certain endpoint, and any updates from the server are sent over that connection for the client to handle accordingly.

This is what Server-Sent Events (SSE) allow: a unidirectional server-to-client connection over which the server can push data (events) to the client whenever it chooses. This is in contrast to regular request-response exchanges, in which the client has to send a request first to receive a response from the server.

The most familiar example of an app that uses SSE is ChatGPT’s chat interface, where the response from the LLM is streamed to the client. This has multiple benefits, the most important being lower perceived latency: the user need not wait for the whole response to be generated, because the LLM streams tokens as it predicts them.

Components of the app

- A Server that can send SSE.

- A vanilla JS client that uses the native EventSource API to (1) establish the SSE connection and (2) handle incoming events.

Let’s build!

The Server

We’ll need a simple API server that can send SSE. For this demo, we’ll be using FastAPI. While FastAPI doesn’t have any built-ins for SSE, the sse_starlette package adds SSE support to Starlette, the ASGI framework FastAPI is built on, so we’ll install that too.

Setup

    mkdir server
    cd server
    poetry init -n
    poetry shell
    poetry add fastapi "uvicorn[standard]" sse-starlette

Code

Let’s start with the basics

server/app.py

from fastapi import FastAPI

from fastapi.responses import Response

app = FastAPI()

@app.get("/")

def root():

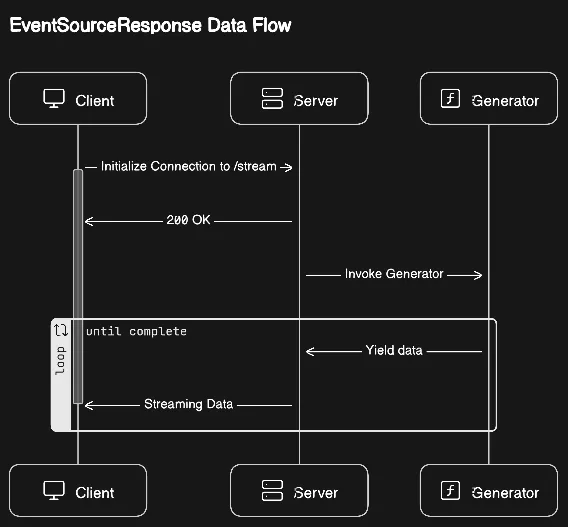

return Response("Hello World")We’ll be using the EventSourceResponse from sse_starlette. It’s usage is simple, we provide an async generator to the content attribute. The response object first returns 200 and then invokes the generator, any value yeilded by the generator is streamed back to the client as Server-Sent Events. Here is a simple sequence chart:
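To make “streamed back as Server-Sent Events” a bit more concrete, here is a minimal sketch of what one event looks like on the wire. The helper name format_sse is hypothetical and only for illustration; sse_starlette does this serialization for you, and its exact output (line separators, for instance) may differ from this simplified version.

```python
from typing import Optional


def format_sse(data: str, event: Optional[str] = None) -> str:
    """Serialize one event in the text/event-stream wire format (simplified)."""
    lines = []
    if event is not None:
        # Optional event type; clients treat a missing type as "message".
        lines.append(f"event: {event}")
    # A multi-line payload needs one "data:" line per line of text.
    for part in data.split("\n"):
        lines.append(f"data: {part}")
    # A blank line marks the end of the event.
    return "\n".join(lines) + "\n\n"


print(repr(format_sse("H")))
print(repr(format_sse("done", event="end")))
```

Each value the generator yields ends up as one such block of text on a single long-lived HTTP response, which is why the client can process events as they arrive.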

Let’s build the async generator. For this app, the generator will simply yield characters from a given string, pausing for 0.05 seconds between each yield. You can replace this with anything you like, for example streaming responses from LLM APIs such as OpenAI’s, or streaming HTML snippets.

server/app.py

    import asyncio

    ...

    # A global variable to hold the client message that'll be
    # streamed back.
    streamable_str = ""


    async def mock_async_generator():
        global streamable_str
        for c in streamable_str:
            yield c
            await asyncio.sleep(0.05)
        # Reset once the whole message has been streamed.
        streamable_str = ""

Now, we’ll need an endpoint to receive the client message and update streamable_str. You could replace this logic with, say, adding the message to a queue, but we’ll keep it simple for now.
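As an aside, the queue-based variant could look roughly like the sketch below. It is not what this tutorial uses (we continue with the global variable); the names stream_chars and demo are made up for illustration, and it relies only on asyncio.Queue from the standard library.

```python
import asyncio


async def stream_chars(queue: asyncio.Queue, delay: float = 0.05):
    """Wait for one message on the queue, then yield it character by character."""
    message = await queue.get()
    for char in message:
        yield char
        await asyncio.sleep(delay)


async def demo() -> str:
    queue: asyncio.Queue = asyncio.Queue()
    # In the app, the POST handler would put the message here
    # instead of assigning to a global variable.
    await queue.put("Hello")
    received = []
    async for char in stream_chars(queue, delay=0):
        received.append(char)
    return "".join(received)


print(asyncio.run(demo()))  # prints "Hello"
```

One benefit of this shape is that the generator waits for a message instead of checking whether a global string happens to be non-empty.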

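server/app.py

    from pydantic import BaseModel

    ...

    # Request body for POST /stream, matching the client's {"message": ...} JSON.
    class UserMessage(BaseModel):
        message: str


    @app.post("/stream")
    def post_user_message(payload: UserMessage):
        global streamable_str
        streamable_str = payload.message + " "
        return Response("success")

Next, the SSE endpoint.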

server/app.py

    from sse_starlette import EventSourceResponse

    ...

    @app.get("/stream")
    def get_stream():
        if streamable_str:
            return EventSourceResponse(content=mock_async_generator())
        # Nothing to stream: respond with an empty event stream.
        return EventSourceResponse(content=iter(()))

Let’s test it out

Update the streamable_str variable to hold some text:

server/app.py

    ...
    streamable_str = "Hello World"
    ...

Then start the server:

    uvicorn app:app --reload

Open up your browser and go to http://localhost:8000/stream; you should see “Hello World” being streamed character by character. Great! We now have a basic server that sends SSE responses. Let’s work on the front end now.

The Client

We’ll be using Vite to set up a vanilla JS frontend.

Setup

    npm create vite@latest client -- --template vanilla

Code

The Vite command above will create a folder called ‘client’ with all our files. Let’s set up the markup for a simple chat app containing a text input to collect the user’s message and a chat window to view the messages.

client/index.html

    <!doctype html>
    <html lang="en">
      <head>
        <meta charset="UTF-8" />
        <link rel="icon" type="image/svg+xml" href="/vite.svg" />
        <meta name="viewport" content="width=device-width, initial-scale=1.0" />
        <title>Vite App</title>
      </head>
      <body>
        <main>
          <section class="chatWindow">
            <div class="chatLogWrapper">
              <!-- The messages will be <li> elements inside this list -->
              <ul class="chatLog"></ul>
            </div>
            <div class="chatInput">
              <form method="POST" id="chatInputForm">
                <input type="text" name="userMessage" id="userMessage" placeholder="Enter your message" required>
                <button type="submit" id="sendMessageBtn">Send</button>
              </form>
            </div>
          </section>
        </main>
        <script type="module" src="/main.js"></script>
      </body>
    </html>

Next, the “chat” logic. This part is self-explanatory:

- Get the user message from the form.

- Create a li element with the user message and add it to the chatLog ul.

- Send the user message to the server.

- Create a corresponding li element to store the server message.

main.js

    import { createChatMessageEl, createMessageIdPair } from "./utils.js"

    let msgId;
    const formEl = document.getElementById("chatInputForm");
    const chatLogEl = document.getElementsByClassName("chatLog")[0];

    formEl.addEventListener("submit", async function(e) {
      e.preventDefault()
      const formData = new FormData(e.target)
      const userMessage = formData.get("userMessage");

      // Show the user's message in the chat log.
      msgId = createMessageIdPair();
      const userMessageEl = createChatMessageEl(msgId.userMsgId)
      // textContent (not innerHTML) so user input is never parsed as HTML.
      userMessageEl.textContent = userMessage;
      chatLogEl.appendChild(userMessageEl);

      // Send the message to the server.
      const result = await fetch('http://127.0.0.1:8000/stream', {
        method: 'POST',
        headers: {
          'Accept': 'text',
          'Content-Type': 'application/json'
        },
        body: JSON.stringify({message: userMessage})
      })

      // Placeholder element that the streamed reply will fill in.
      const emptyAiResponseEl = createChatMessageEl(msgId.aiMsgId, true);
      chatLogEl.appendChild(emptyAiResponseEl);
    })

createMessageIdPair generates a pair of IDs (userMsgId and aiMsgId) used to tell client and server messages apart. createChatMessageEl is a simple wrapper that creates the actual <li> element.

Now, handling the streaming response received from the server:

- Get the previously created li element for the server message.

- Initialize the connection to the SSE endpoint and set up handlers that update the li element with each event being streamed.

main.js

    ...
    formEl.addEventListener("submit", async function(e) {
      ...
      chatLogEl.appendChild(emptyAiResponseEl);

      if (result.ok) {
        const aiResponseEl = document.getElementById(msgId.aiMsgId);
        // Clear the placeholder's inline styles.
        aiResponseEl.style = ''

        // Open the SSE connection and append each streamed character.
        const eventSource = new EventSource('http://127.0.0.1:8000/stream')
        eventSource.addEventListener("message", (event) => {
          aiResponseEl.textContent += event.data
        })
        eventSource.addEventListener("error", (event) => {
          console.error("error", event)
        })
      }
    })

We’re done with both the client and the server. Let’s spin up both apps:

    # in client/
    npm run dev

    # in server/
    uvicorn app:app --reload

Head over to the client at http://localhost:5173 and send a message; you should get the same message streamed back.

Our app is functionally complete, but we have one more thing to do. If you open the network tab, you will notice multiple calls being made to the SSE endpoint. This is the EventSource API’s retry mechanism at work: when the server closes the connection after the stream ends, EventSource automatically reconnects. Once our client message has been streamed back, streamable_str is reset to an empty value, so the reconnects stream no events. We need a way to signal that a message has been fully streamed so the client can close the connection instead of retrying. We can do this by tracking the stream’s progress and sending a custom event type that marks the end of the stream:

app.py

    from sse_starlette import EventSourceResponse, ServerSentEvent

    ...

    streamable_str = ""


    async def mock_async_generator():
        global streamable_str
        end = len(streamable_str)
        for idx, c in enumerate(streamable_str):
            if idx == end - 1:
                # Last character (normally the trailing space added by the
                # POST handler): send it as a custom "end" event.
                yield ServerSentEvent(data=c, event="end")
                break
            yield ServerSentEvent(data=c, event="message")
            await asyncio.sleep(0.05)
        streamable_str = ""

    ...

Now, the server will send the “end” event once a message is fully streamed, and we can handle it accordingly.

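main.js

    ...
    if (result.ok) {
      ...
      eventSource.addEventListener("end", (event) => {
        // Stop EventSource from reconnecting now that the message is complete.
        eventSource.close()
        // controlFormState is a helper not shown in this post.
        controlFormState()
      })
      ...
    }
    ...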
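To see what the browser is reacting to here, the sketch below parses a few lines of an event stream in Python and stops at the first “end” event, mirroring the handlers above. It is a simplified reading of the event-stream format, not a full implementation of it, and the names parse_sse and collect_until_end are made up for this illustration.

```python
from typing import Iterable, Iterator, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event_type, data) pairs from text/event-stream lines."""
    event_type, data_parts = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # Blank line: dispatch the buffered event if any data lines were seen.
            if data_parts:
                yield event_type, "\n".join(data_parts)
            event_type, data_parts = "message", []
        elif line.startswith(":"):
            continue  # comment line, e.g. a keep-alive ping
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "event":
                event_type = value
            elif field == "data":
                data_parts.append(value)


def collect_until_end(lines: Iterable[str]) -> str:
    """Accumulate 'message' data and stop at the first 'end' event."""
    text = ""
    for event_type, data in parse_sse(lines):
        if event_type == "end":
            break  # the browser client calls eventSource.close() at this point
        if event_type == "message":
            text += data
    return text


stream = ["event: message", "data: H", "", "event: message", "data: i", "",
          "event: end", "data:  ", "", "event: message", "data: !", ""]
print(collect_until_end(stream))  # prints "Hi"
```

Note that the data carried by the “end” event is dropped, just as in the JavaScript handler; that is harmless in our app because the last character is the trailing space the server appends to every message.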

Great, now the app is fully functional. Here’s the repo with all that we’ve learnt: learn-server-sent-events. It also contains a bonus implementation of SSE in React.

Closing thoughts

Learning and implementing SSE has been fun, and with this being my first “blog”, I’ve done my best to document my learnings. If you have any questions, feel free to reach out @9akashnp8.

Stay curious! Keep building!